Deep Learning for Text Detection (Part 2)

This article is the second part of “Deep learning for text detection.” In part 1, we outlined some deep learning approaches based on object detection used to perform text detection.

In this part, we will outline some approaches based on image segmentation.

There are different types of image segmentation, but for text detection, we will focus on instance segmentation.

Instance segmentation

Instance segmentation is the process of assigning each pixel in an image to one of N categories while also distinguishing between pixels that belong to the same class but to different objects. For example, if two people appear in the same image, a pixel belonging to person A must be told apart from a pixel belonging to person B, even though both pixels have the class "person."
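To make the difference between a class mask and an instance map concrete, here is a minimal sketch in plain Python. It assumes a hypothetical binary mask where 1 means "text" and splits it into instances with a simple 4-connected flood fill. This is only an illustration: the networks discussed in this article learn to separate instances (including touching ones) rather than relying on connected components, and the function name `label_instances` is made up for this example.

```python
from collections import deque


def label_instances(mask):
    """Give every 4-connected blob of 1s in a binary mask its own positive id."""
    rows, cols = len(mask), len(mask[0])
    labels = [[0] * cols for _ in range(rows)]
    next_id = 0
    for r in range(rows):
        for c in range(cols):
            # Skip background pixels and pixels that already have an id.
            if mask[r][c] != 1 or labels[r][c] != 0:
                continue
            next_id += 1
            labels[r][c] = next_id
            queue = deque([(r, c)])
            # Breadth-first flood fill over the current blob.
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and mask[ny][nx] == 1 and labels[ny][nx] == 0:
                        labels[ny][nx] = next_id
                        queue.append((ny, nx))
    return labels, next_id


# Hypothetical class mask: every text pixel has the same value (1).
semantic_mask = [
    [1, 1, 0, 0, 1],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
]

# Instance map: pixels of the same class now carry different ids per object.
instance_map, count = label_instances(semantic_mask)
for row in instance_map:
    print(row)
print("instances found:", count)
```

In the class mask, all text pixels look alike; in the instance map, each separate blob carries its own id, which is the extra information instance segmentation provides.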

Instance segmentation, just like object detection, is a general-purpose method that can be used for several tasks, not just text detection. In the following sections, we’re going to look at some of the deep learning architectures that perform text detection using instance segmentation.

Two well-known instance segmentation techniques for text detection are based on fully convolutional networks (FCNs). We will detail them next.

Multi-Oriented Text Detection with Fully Convolutional Networks

An FCN is an architecture built from convolution layers, without any fully connected (dense) layers. The same kind of convolutional stack usually serves as the first block of computer vision deep learning models. For example, in classification networks such as VGG16 or InceptionV4, the convolutional blocks come right after the input and before the final dense layers.
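One practical consequence of using only convolution layers is that the network accepts inputs of different sizes and returns a spatial map rather than a fixed-length vector. The sketch below illustrates this with a tiny two-layer "network" in plain Python. The kernels are hypothetical fixed weights chosen for the example, not learned ones, and `tiny_fcn` is an illustrative name.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image without padding ("valid" mode)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Element-wise ReLU activation."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def tiny_fcn(image):
    """Two convolution layers and no dense layer (weights are made up)."""
    edge = [[-1.0, 0.0, 1.0], [-1.0, 0.0, 1.0], [-1.0, 0.0, 1.0]]
    smooth = [[0.25, 0.25], [0.25, 0.25]]
    return conv2d_valid(relu(conv2d_valid(image, edge)), smooth)


# Two inputs of different sizes pass through the same layers.
small = [[float(x) for x in range(6)] for _ in range(6)]   # 6 x 6
large = [[float(x) for x in range(10)] for _ in range(8)]  # 8 rows x 10 columns

small_out = tiny_fcn(small)
large_out = tiny_fcn(large)
print(len(small_out), "x", len(small_out[0]))
print(len(large_out), "x", len(large_out[0]))
```

The output size follows the input size, which is what makes this kind of architecture convenient for per-pixel predictions such as text score maps. A dense layer, by contrast, would fix the expected input size.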

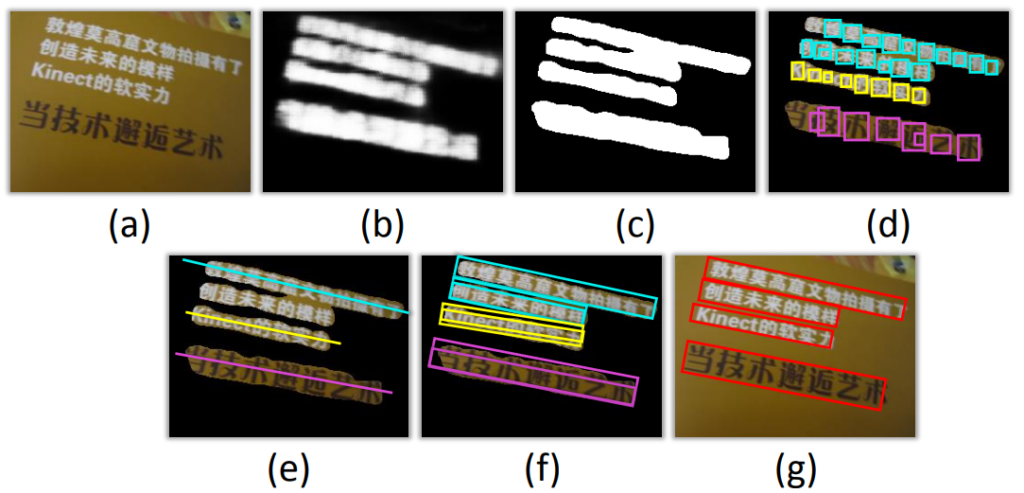

For text detection, Zhang et al., in their paper “Multi-Oriented Text Detection with Fully Convolutional Networks” [1], described an approach that uses FCNs. The system they designed is composed of several components, but the main one is the FCN block. The figure below shows how text detection works using their method.

Figure 1: Text detection using FCN [1]

In Figure 1, seven images show the stages of the text detection process:

- The input image.

- The salient map of the text regions predicted by the TextBlock FCN.

- Text block generation using the previous step’s output (the salient map).

- Candidate character component extraction.

- Orientation estimation by component projection.

- Text line candidates extraction.

- The detection results of the proposed method.
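The orientation estimation step can be illustrated with a small sketch. The idea shown here is a simplified take on projection: for each candidate angle, check how many character component centers fall close to a single line with that orientation, and keep the angle with the highest count. This is not the exact procedure from [1]; the function name, the 5-degree angle grid, and the tolerance `tol` are all assumptions made for the example.

```python
import math


def estimate_orientation(centers, angles_deg=range(-90, 90, 5), tol=1.5):
    """Return the candidate angle whose best line passes near the most centers."""
    best_angle, best_count = None, -1
    for angle in angles_deg:
        theta = math.radians(angle)
        # Signed offset of each center from a line through the origin
        # with direction (cos(theta), sin(theta)).
        offsets = [-x * math.sin(theta) + y * math.cos(theta) for x, y in centers]
        # Try a line through each center and count the centers within tol of it.
        count = max(
            sum(1 for o in offsets if abs(o - anchor) <= tol)
            for anchor in offsets
        )
        if count > best_count:
            best_angle, best_count = angle, count
    return best_angle, best_count


# Hypothetical component centers (x, y): five along a 45-degree line, one outlier.
centers = [(0, 0), (10, 10), (20, 20), (30, 30), (40, 40), (5, 30)]
angle, support = estimate_orientation(centers)
print("estimated angle:", angle, "degrees, supported by", support, "components")
```

Note that the sketch uses a mathematical coordinate system with y pointing up. In image coordinates, where y grows downward, the sign of the angle flips.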

TextSnake for text detection

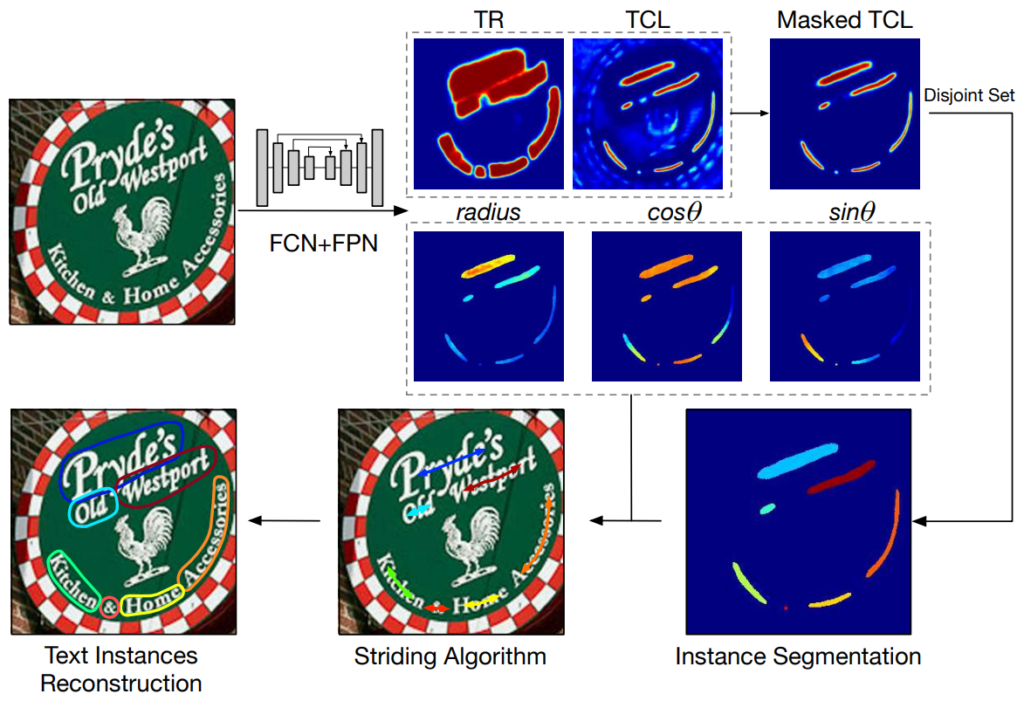

TextSnake is a neural network architecture that is also based on FCNs. Unlike the approach described in the previous section, TextSnake uses a more flexible representation that allows it to detect text of arbitrary shapes. The figure below shows how this approach works.

Figure 2: TextSnake framework [2]

TextSnake starts with an input image, which is passed through an encoder-decoder network with an FCN-FPN based architecture. FPN stands for Feature Pyramid Network [3]. The network outputs score maps for the text center line (TCL) and the text regions (TR), along with geometry attributes (radius and rotation angle). The TCL map is then masked by the TR map, so that only center line pixels lying inside a text region are kept. Finally, post-processing reconstructs the text instances from these outputs.
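The masking and reconstruction steps can be sketched in a few lines of plain Python. The example below assumes small, made-up score maps, a 0.5 threshold, and a constant predicted radius of 1.0 for every center line pixel. It rebuilds the text area as a union of disks centered on the masked center line. It deliberately ignores the rotation angle and the ordering of center points that the actual post-processing in [2] handles, so treat it as an illustration rather than the authors' implementation.

```python
def mask_tcl(tcl_scores, tr_scores, tcl_thresh=0.5, tr_thresh=0.5):
    """Keep a center line pixel only if it also lies inside a text region."""
    return [
        [1 if t >= tcl_thresh and r >= tr_thresh else 0 for t, r in zip(t_row, r_row)]
        for t_row, r_row in zip(tcl_scores, tr_scores)
    ]


def disks_to_mask(disks, height, width):
    """Rasterize the union of disks given as (center_x, center_y, radius)."""
    mask = [[0] * width for _ in range(height)]
    for cx, cy, radius in disks:
        for y in range(height):
            for x in range(width):
                if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2:
                    mask[y][x] = 1
    return mask


# Hypothetical score maps (3 rows x 5 columns), values in [0, 1].
tcl_scores = [
    [0.1, 0.2, 0.1, 0.1, 0.9],  # the 0.9 here is a false positive outside the text
    [0.8, 0.9, 0.9, 0.7, 0.1],
    [0.1, 0.1, 0.2, 0.1, 0.1],
]
tr_scores = [
    [0.9, 0.9, 0.9, 0.9, 0.2],
    [0.9, 0.9, 0.9, 0.9, 0.1],
    [0.9, 0.9, 0.9, 0.9, 0.1],
]

masked_tcl = mask_tcl(tcl_scores, tr_scores)

# One disk per remaining center line pixel; radius 1.0 is an assumed prediction.
disks = [
    (x, y, 1.0)
    for y, row in enumerate(masked_tcl)
    for x, value in enumerate(row)
    if value == 1
]
text_region = disks_to_mask(disks, height=3, width=5)

for row in masked_tcl:
    print(row)
for row in text_region:
    print(row)
```

The false positive in the top-right corner of the TCL map is removed because the TR map scores that pixel low, which is the purpose of the masking step. Because the disks follow the center line wherever it goes, the same representation works for curved text as well as straight text.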

Conclusion

In this article, we covered some deep learning techniques that use instance segmentation to perform text detection. We looked at two FCN-based approaches: the multi-oriented text detection method of Zhang et al. [1] and TextSnake [2]. This is the second and last part of the “Deep learning for text detection” blog articles. In future articles, we will get into the second major part of an OCR system, which is text recognition.

Cheers!

References:

[1] Zheng Zhang, Chengquan Zhang, Wei Shen, Cong Yao, Wenyu Liu, Xiang Bai, “Multi-Oriented Text Detection with Fully Convolutional Networks.”

[2] Shangbang Long, Jiaqiang Ruan, Wenjie Zhang, Xin He, Wenhao Wu, Cong Yao, “TextSnake: A Flexible Representation for Detecting Text of Arbitrary Shapes.”

[3] Tsung-Yi Lin, Piotr Dollár, Ross Girshick, Kaiming He, Bharath Hariharan, Serge Belongie, “Feature Pyramid Networks for Object Detection.”
