Deep Learning for Text Recognition

Machine learning for text recognition is transforming OCR (optical character recognition) by using advanced algorithms to improve accuracy and speed.

In the previous articles of our series, we covered some of the deep learning techniques used for text detection, the first stage of a typical OCR system. In this article, we cover the second stage: text recognition.

Text recognition without text segmentation

Text recognition takes as input an image region that contains text and outputs the text written in that region. Deep learning is well suited to this task.

Before deep learning, recognizing text in images required extensive preprocessing and postprocessing, such as segmenting the region into individual characters. Deep learning simplifies much of this pipeline: a neural network learns powerful features of the text directly from the image, and the same network then uses those features to transcribe the text. As a result, the region does not need to be split into characters beforehand.

Special deep learning layers for text recognition

A common approach to text recognition with deep learning relies on a specific type of layer: recurrent layers.

Transformer-based models can also be used, but we will not cover them here. We may detail them in a future article.

Recurrent layers are a core component of CRNNs (Convolutional Recurrent Neural Networks). The two most common types of recurrent layers are LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit).
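To make the LSTM concrete, here is a minimal sketch of one time step of a standard LSTM cell (without peephole connections) in plain Python. At each step the cell computes an input gate, a forget gate, an output gate, and a candidate value, then updates its cell state and hidden state. The names `lstm_step`, `affine`, and the `params` layout (a dict mapping gate names to `(W, U, b)` tuples) are hypothetical choices for this illustration; deep learning frameworks implement the same math with batched tensor operations.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def affine(W, U, b, x, h):
    """Compute W·x + U·h + b for one gate (W: n×m, U: n×n, b: length n)."""
    return [
        sum(w * xi for w, xi in zip(W_row, x))
        + sum(u * hi for u, hi in zip(U_row, h))
        + b_i
        for W_row, U_row, b_i in zip(W, U, b)
    ]


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step (hypothetical helper).

    params maps each gate name ('i', 'f', 'o', 'g') to a (W, U, b) tuple.
    Returns the new hidden state h and cell state c.
    """
    i = [sigmoid(z) for z in affine(*params["i"], x, h_prev)]    # input gate
    f = [sigmoid(z) for z in affine(*params["f"], x, h_prev)]    # forget gate
    o = [sigmoid(z) for z in affine(*params["o"], x, h_prev)]    # output gate
    g = [math.tanh(z) for z in affine(*params["g"], x, h_prev)]  # candidate values
    # Keep part of the old memory and add part of the new candidate.
    c = [fi * ci + ii * gi for fi, ci, ii, gi in zip(f, c_prev, i, g)]
    # Expose a gated, squashed view of the memory as the hidden state.
    h = [oi * math.tanh(ci) for oi, ci in zip(o, c)]
    return h, c
```

A GRU follows the same pattern with two gates (update and reset) and no separate cell state, which makes it slightly cheaper to compute.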

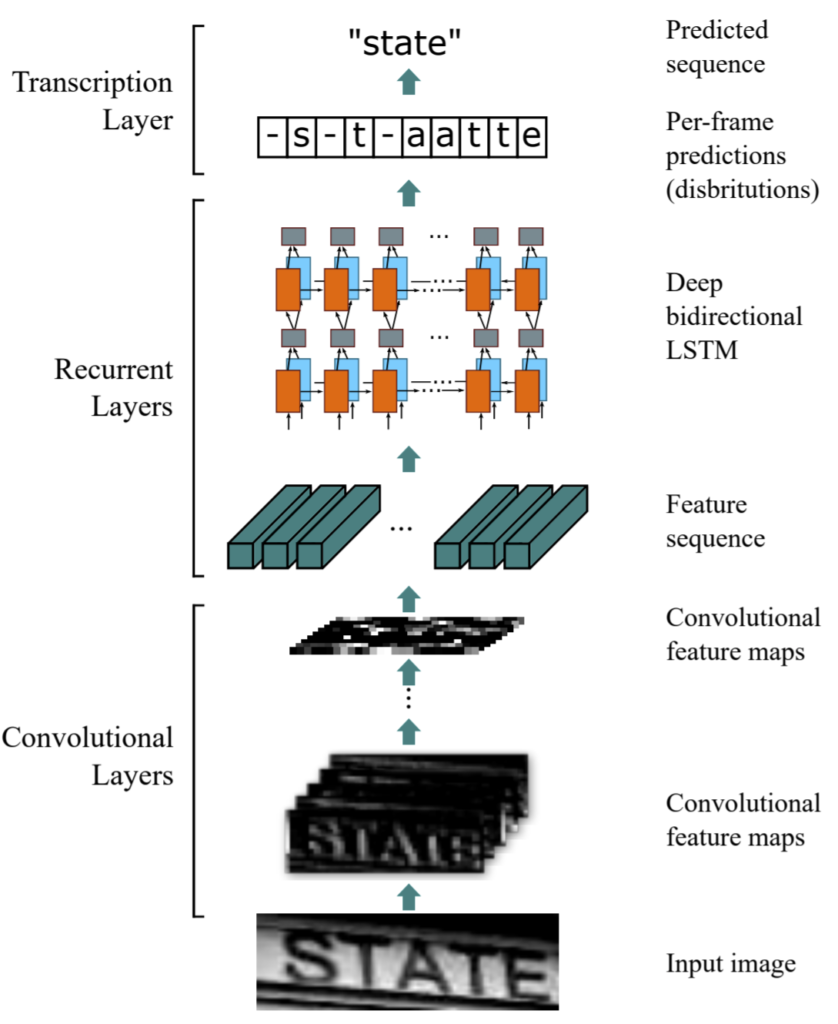

A neural network for text recognition is generally composed of two parts: a convolutional feature extractor and a recurrent decoder.

As in many computer vision tasks, convolutional layers extract features from the image in the form of feature maps. These feature maps are then turned into a sequence, typically one feature vector per column from left to right, and fed to recurrent layers such as LSTMs.
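The step that connects the two parts, called "map-to-sequence" in the CRNN paper [1], can be sketched in a few lines. The sketch below assumes a feature map stored as plain nested lists indexed `[channel][row][column]` (a hypothetical layout; real frameworks use tensors) and turns each column into one time step for the recurrent layers.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # indexed as [channel][row][column]


def feature_map_to_sequence(fmap: FeatureMap) -> List[List[float]]:
    """Turn a C x H x W feature map into W feature vectors of length C * H.

    Vector t describes column t of the image region, so the sequence
    reads the text from left to right.
    """
    channels = len(fmap)
    height = len(fmap[0])
    width = len(fmap[0][0])
    sequence = []
    for col in range(width):
        # Stack the values of every channel and row in this column.
        vector = [fmap[c][r][col] for c in range(channels) for r in range(height)]
        sequence.append(vector)
    return sequence


# Two channels, height 1, width 3: three time steps with two features each.
print(feature_map_to_sequence([[[1, 2, 3]], [[4, 5, 6]]]))  # [[1, 4], [2, 5], [3, 6]]
```

Because each vector corresponds to a vertical slice of the image, the width of the feature map determines how many time steps the recurrent layers see.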

LSTMs are widely used in NLP (natural language processing), where they have proven effective at learning sequential patterns such as those found in text. It is therefore no surprise that they also learn well from features extracted from images of text.

The figure below shows an example of such a text recognition system, in which convolutional layers are followed by recurrent layers.

Architecture of CRNN [1]

Other important concepts for text recognition

Two other concepts are important when implementing such a system: bidirectional layers and CTC loss.

1. Bidirectional layers

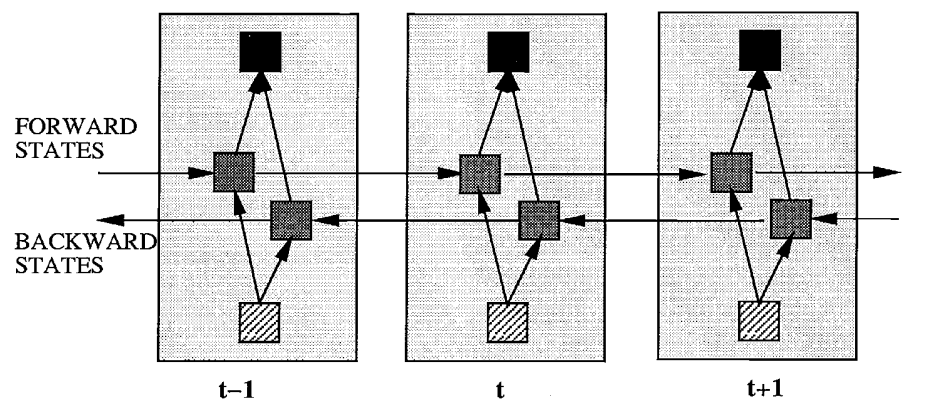

A bidirectional layer combines two hidden layers, in our case two recurrent layers: one reads the input sequence forward (left to right) and the other reads it backward (right to left). Their outputs are typically concatenated at each time step, so the system can “look” at the input in both directions. This is very useful for text recognition because the network can “see” the letters (or words) that come both before and after the current one. The figure below shows an example of a bidirectional layer.

Bidirectional layer of three steps [2]
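The idea can be sketched independently of any framework. In the sketch below, `make_simple_rnn_step`, `run`, and `bidirectional` are hypothetical helpers, and the recurrent cell is a deliberately tiny element-wise tanh RNN standing in for an LSTM or GRU. One cell reads the sequence left to right, a second reads it right to left, and their hidden states are concatenated at each time step.

```python
import math
from typing import Callable, List

Vector = List[float]
Step = Callable[[Vector, Vector], Vector]


def make_simple_rnn_step(w_x: float, w_h: float) -> Step:
    """Toy recurrent cell: h_t = tanh(w_x * x_t + w_h * h_{t-1}), element-wise."""
    def step(x: Vector, h: Vector) -> Vector:
        return [math.tanh(w_x * xi + w_h * hi) for xi, hi in zip(x, h)]
    return step


def run(step: Step, xs: List[Vector], h0: Vector) -> List[Vector]:
    """Run a recurrent cell over a sequence; return the hidden state at each step."""
    outputs, h = [], h0
    for x in xs:
        h = step(x, h)
        outputs.append(h)
    return outputs


def bidirectional(forward_step: Step, backward_step: Step,
                  xs: List[Vector], h0: Vector) -> List[Vector]:
    """Concatenate forward and backward hidden states for each time step."""
    fwd = run(forward_step, xs, h0)
    # Read the sequence in reverse, then flip the results back into original order.
    bwd = run(backward_step, xs[::-1], h0)[::-1]
    return [f + b for f, b in zip(fwd, bwd)]


step = make_simple_rnn_step(1.0, 1.0)
out = bidirectional(step, step, [[0.0], [0.0], [1.0]], [0.0])
# At the first time step the forward half has seen only zeros (0.0), while the
# backward half has already seen the final input, so its value is positive.
print(out[0])
```

In practice the two directions have separate weights; the sketch reuses one cell for both only to keep the example short.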

2. CTC loss

CTC stands for Connectionist Temporal Classification [3]. It is a loss function used in many deep learning applications, including text recognition and audio applications such as speech recognition. During training, we need both the image and its label (the text written on the image), because we want the neural network to learn a mapping from one to the other. The CTC loss compares the network's per-time-step character predictions with the label. Its key advantage is that we do not need to know which time steps correspond to which character: CTC adds a special “blank” symbol and sums the probabilities of all alignments that reduce to the label once repeated characters are merged and blanks are removed.
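The summation over alignments can be computed efficiently with the forward algorithm from [3]. The sketch below is a minimal, hypothetical `ctc_loss` that works directly with probabilities for readability; it assumes `probs[t][k]` is the softmax output for symbol `k` at time step `t` and that index 0 is the blank. Real implementations work in log space to avoid numerical underflow, and frameworks ship built-in versions (for example, PyTorch's `torch.nn.CTCLoss`).

```python
import math
from typing import Sequence


def ctc_loss(probs: Sequence[Sequence[float]], label: Sequence[int], blank: int = 0) -> float:
    """Negative log-likelihood of `label` under CTC (probability-space sketch).

    probs[t][k]: probability of symbol k at time step t (index `blank` is the blank).
    label: symbol indices of the target text, without blanks.
    """
    # Extended label with blanks around every symbol: -, l1, -, l2, ..., -
    ext = [blank]
    for symbol in label:
        ext += [symbol, blank]
    T, S = len(probs), len(ext)

    # alpha[s]: total probability of all partial alignments ending at ext[s].
    alpha = [0.0] * S
    alpha[0] = probs[0][ext[0]]
    if S > 1:
        alpha[1] = probs[0][ext[1]]

    for t in range(1, T):
        new = [0.0] * S
        for s in range(S):
            total = alpha[s]                  # stay on the same symbol
            if s >= 1:
                total += alpha[s - 1]         # move to the next position
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                total += alpha[s - 2]         # skip a blank between different symbols
            new[s] = total * probs[t][ext[s]]
        alpha = new

    # Valid alignments end on the last symbol or on the trailing blank.
    p = alpha[-1] + (alpha[-2] if S > 1 else 0.0)
    return math.inf if p == 0.0 else -math.log(p)


# Two time steps, symbols {0: blank, 1: "a"}, uniform predictions.
# "aa", "a-" and "-a" all reduce to "a", so p = 3 * 0.25 = 0.75.
print(ctc_loss([[0.5, 0.5], [0.5, 0.5]], [1]))  # equals -log(0.75)
```

Note the rule that forbids skipping the blank between two identical symbols: a repeated letter such as "aa" needs at least one blank between its two copies, so it requires at least three time steps.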

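At test time, only the image is used as input. The network's per-time-step predictions are then decoded into text, for example by taking the most likely symbol at each time step, merging consecutive repeats, and removing the special blank symbol that CTC uses to separate characters.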
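The simplest decoding strategy, often called best-path or greedy decoding, can be sketched as follows. The function name `greedy_ctc_decode` and the `alphabet` convention (a string whose character at index `k` is the output for symbol `k`, with index 0 reserved for the CTC blank) are hypothetical choices for this example.

```python
from typing import Sequence


def greedy_ctc_decode(probs: Sequence[Sequence[float]], alphabet: str, blank: int = 0) -> str:
    """Best-path CTC decoding.

    Pick the most likely symbol at each time step, merge consecutive
    repeats, then drop blanks. alphabet[k] is the character for symbol k;
    the entry at index `blank` is never emitted.
    """
    best = [max(range(len(p)), key=p.__getitem__) for p in probs]
    chars = []
    previous = None
    for k in best:
        # Emit a character only when it differs from the previous frame and is not blank.
        if k != previous and k != blank:
            chars.append(alphabet[k])
        previous = k
    return "".join(chars)


a, blank, b = [0.1, 0.8, 0.1], [0.8, 0.1, 0.1], [0.1, 0.1, 0.8]
# Frames: a a - a b b  ->  "aab" (the blank keeps the two a's apart)
print(greedy_ctc_decode([a, a, blank, a, b, b], "-ab"))  # aab
```

Greedy decoding is fast but only considers the single most likely path; beam search decoders can recover better transcriptions at a higher computational cost.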

Conclusion

In this article, we covered how deep learning is used for text recognition. Several key concepts are needed to formulate text recognition as a deep learning problem: recurrent layers, bidirectional layers, and CTC loss.

This is the fourth and last article of our series, “Deep learning for OCR.” If you’re interested in the other articles on this topic, check out our previous blog posts.

Cheers!

Nour Islam Mokhtari, Guest Author, Machine Learning Engineer

References:

[1] Baoguang Shi, Xiang Bai, and Cong Yao, “An End-to-End Trainable Neural Network for Image-based Sequence Recognition and Its Application to Scene Text Recognition.”

[2] Mike Schuster and Kuldip K. Paliwal, “Bidirectional Recurrent Neural Networks.”

[3] Alex Graves, Santiago Fernández, Faustino Gomez, and Jürgen Schmidhuber, “Connectionist Temporal Classification: Labelling Unsegmented Sequence Data with Recurrent Neural Networks.”

